For users unfamiliar with Spark DataFrames, Databricks recommends using SQL for Delta Live Tables.

Solution: PySpark check if a column exists in a DataFrame.

The `@dlt.table` decorator tells Delta Live Tables to create a table that contains the result of a DataFrame returned by a function.

Number of files in the table after restore.

`replace` has the same limitation as Delta shallow clone: the target table must be emptied before applying `replace`. Converting Iceberg tables that have experienced …

This recipe teaches us how to create an external table over data already stored in a specific location. Then we create a Delta table, optimize it, and run a second query using the Databricks Delta version of the same table to see the performance difference. Finally, we view the contents of the file through the table we created.

For example, you can set `delta.appendOnly = true` as a session-level default so that it applies to all new Delta Lake tables created in that session. To modify table properties of existing tables, use `SET TBLPROPERTIES`. You can specify the log retention period independently for the archive table.

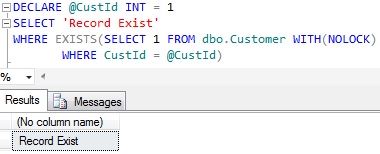

Delta Lake can write batch and streaming data into the same table, which allows a simpler architecture and shortens the path from data ingestion to query results.

`removed_files_size`: Total size in bytes of the files that are removed from the table. Time taken to scan the files for matches.

You can add the example code to a single cell of the notebook or to multiple cells. See Configure SparkSession for the steps to enable support for SQL commands in Apache Spark. You can turn off this safety check by setting the Spark configuration property …

Delta Lake can also infer the schema of input data, which further reduces the effort required to manage schema changes.

But next time I just want to read the saved table. For fun, let's try to use flights table version 0, which is prior to applying optimization on …

For example, `bin/spark-sql --packages io.delta:delta-core_2.12:2.3.0,io.delta:delta-iceberg_2.12:2.3.0`.

You can convert a Delta table back to a Parquet table, and you can restore a Delta table to its earlier state by using the `RESTORE` command. Number of files in the latest version of the table. The output of the history operation has the following columns.

Apache Spark was originally developed at UC Berkeley in 2009. Delta Lake provides ACID transactions, scalable metadata handling, and unifies streaming and batch data processing. Having too many files makes workers spend more time accessing, opening, and closing files when reading, which hurts performance.

When a DataFrame writes data to Hive, it goes to the Hive default database unless another database is specified. The Spark SQL `SaveMode` and `SparkSession` classes are imported into the environment to create the Delta table. … doesn't need to be the same as that of the existing table.

Here, the table we are creating is an external table, so the metastore manages only its metadata and not the underlying data files. If a table path has an empty `_delta_log` directory, is it a Delta table? The original Iceberg table and the converted Delta table have separate histories, so modifying the Delta table should not affect the Iceberg table as long as the source Parquet data files are not touched or deleted.
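
The question above about an empty `_delta_log` directory can be made concrete with a small filesystem check. This is a minimal sketch for a local path only. The function name `looks_like_delta_table` is hypothetical, and the rule it applies (at least one 20-digit `.json` commit file must exist) is an assumption of this sketch, not a statement of how Delta Lake itself decides.

```python
import re
from pathlib import Path

# Delta commit files are named with a zero-padded 20-digit version, e.g.
# 00000000000000000000.json. Matching only these files is an assumption
# of this sketch; checkpoints and other log files are ignored.
COMMIT_FILE = re.compile(r"^\d{20}\.json$")


def looks_like_delta_table(table_path):
    """Return True if table_path has a _delta_log directory with a commit file."""
    log_dir = Path(table_path) / "_delta_log"
    if not log_dir.is_dir():
        # No transaction log directory at all.
        return False
    # An empty _delta_log directory yields False under this heuristic.
    return any(COMMIT_FILE.match(entry.name) for entry in log_dir.iterdir())
```

Under this heuristic, a path with an empty `_delta_log` directory is not treated as a Delta table, because no version of the table has been committed yet.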

I can see the files are created in the default spark-warehouse folder. We convert the list into a string tuple (`"('A', 'B')"`) to align with the SQL syntax using `str(tuple(~))`. PySpark provides the `StructType` class (`from pyspark.sql.types import StructType`) to define the structure of the DataFrame. Click Browse to upload files from your local machine.
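
The `str(tuple(~))` conversion mentioned above can be sketched in plain Python. The helper name `to_sql_in_list` is hypothetical. The sketch also handles the single-element case, where `str(tuple(...))` leaves a trailing comma that SQL does not accept.

```python
def to_sql_in_list(values):
    """Render a list of strings as a SQL tuple literal such as ('A', 'B')."""
    if not values:
        raise ValueError("an empty list cannot be rendered as a SQL tuple")
    if len(values) == 1:
        # str(tuple(['A'])) gives "('A',)"; drop the trailing comma for SQL.
        return "(" + repr(values[0]) + ")"
    # Relies on Python's repr of strings, so values that contain a single
    # quote are NOT escaped correctly for SQL. Use only with trusted,
    # simple values.
    return str(tuple(values))


in_list = to_sql_in_list(["A", "B"])
print(in_list)  # ('A', 'B')
```

The resulting string can be interpolated after an `IN` keyword in a query string. For untrusted input, parameterized queries or DataFrame filters are a safer choice than string building.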

The data is written to the Hive table or Hive table partition. Another suggestion, which avoids creating a list-like structure: `if (spark.sql("show tables in` …

Delta Live Tables tables are conceptually equivalent to materialized views. Converting Iceberg metastore tables is not supported. We will also look at the table history. This recipe helps you create Delta tables in Databricks in PySpark.

I would use the first approach because the second seems to trigger a Spark job, so it is slower. Otherwise you get `AnalysisException: Table or view not found`.

You can retrieve information on the operations, user, timestamp, and so on for each write to a Delta table by running the history command. The `RESTORE` command accepts either a version corresponding to the earlier state or a timestamp of when the earlier state was created.

Apache Parquet is a columnar file format that provides optimizations to speed up queries. `month_id = 201902` indicates that the table is partitioned by month, and `day_id = 20190203` indicates that it is also partitioned by day. The partition exists in the table structure in the form of a field.

The majority of data lake projects fail, and Delta Lake aims to bring both reliability and performance to data lakes. To make changes to the clone, users need write access to the clone's directory. It provides the high-level definition of the tables, such as whether a table is external or internal, the table name, and so on.

Implementation Info: Step 1: Uploading data to DBFS. We will read the dataset, which is originally in CSV format: `.load("/databricks-datasets/asa/airlines/2008.csv")`. It contains over 7 million records.

Checking if a field exists in a schema. A PySpark DataFrame has a `columns` attribute (a property, not a method) that returns all column names as a list, so you can use plain Python to check whether a column exists. To check in a case-insensitive way, convert both the column name you are looking for and all DataFrame column names to upper case before comparing.
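
The column check described above can be sketched in plain Python. The list below stands in for `df.columns`; the column names and the helper name `has_column` are illustrative assumptions, not taken from the source.

```python
def has_column(columns, name, case_sensitive=True):
    """Return True if name appears in columns (a list such as df.columns)."""
    if case_sensitive:
        return name in columns
    # Case-insensitive: compare upper-cased names on both sides.
    wanted = name.upper()
    return any(col.upper() == wanted for col in columns)


# Stand-in for df.columns; these names are hypothetical.
columns = ["Year", "Month", "UniqueCarrier", "ArrDelay"]

print(has_column(columns, "Month"))                        # True
print(has_column(columns, "month"))                        # False
print(has_column(columns, "month", case_sensitive=False))  # True
```

With a real DataFrame, the same helper could be called as `has_column(df.columns, "month", case_sensitive=False)`. It only inspects the list of names, so it does not trigger a Spark job.
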
If a Parquet table was created by Structured Streaming, the listing of files can be avoided by using the `_spark_metadata` sub-directory as the source of truth for the files contained in the table. To do so, set the SQL configuration `spark.databricks.delta.convert.useMetadataLog` to `true`.

Spark can access diverse data sources. Number of files removed by the restore operation.

In this article, you have learned how to check whether a column exists in DataFrame columns, in struct columns, and in a case-insensitive way.