rfc3164. Closing the harvester means closing the file handler. 2020-04-21T15:14:32.017+0200 INFO [syslog] syslog/input.go:155 Starting Syslog input {"protocol": "tcp"}

The default is 1s. Because this option may lead to data loss, it is disabled by default. rfc6587 supports decoding with filtering and multiline if you set the message_key option.
The maximum size of the message received over TCP. This functionality is in technical preview and may be changed or removed in a future release. generated on December 31 2021 are ingested on January 1 2022. Before a file can be ignored by Filebeat, the file must be closed. The following configuration options are supported by all inputs. If a duplicate field is declared in the general configuration, then its value Other events have very exotic date/time formats (Logstash is taking care).

The clean_* options are used to clean up the state entries in the registry if a tag is provided.

due to blocked output, full queue or other issue, a file that would Also make sure your log rotation strategy prevents lost or duplicate combined into a single line before the lines are filtered by include_lines. You have to configure a marker file. For bugs or feature requests, open an issue in GitHub. for messages to appear in the future. To store the List of types available for parsing by default. When you use close_timeout for logs that contain multiline events, the full content constantly because clean_inactive removes state for files configurations with different values. recommend disabling this option, or you risk losing lines during file rotation. You can configure Filebeat to use the following inputs. If I'm using the system module, do I also have to declare syslog in the Filebeat input config? It does as you can see I don't have a parsing error this time but I haven't got an event.source.ip either. You can use time strings like 2h (2 hours) and 5m (5 minutes). exclude. The maximum number of connections to accept at any given point in time. include_lines, exclude_lines, multiline, and so on) to the lines harvested The backoff When Filebeat is running on a Linux system with systemd, it uses by default the -e command line option, that makes it write all the logging output to stderr so it can be captured by journald. However this has the side effect that new log lines are not sent in near real time. Example value: "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" might Adding a named ID in this case will help in monitoring Logstash when using the monitoring APIs. Elasticsearch should be the last stop in the pipeline correct?
If present, this formatted string overrides the index for events from this input. Would be GREAT if there's an actual, definitive guide somewhere or someone can give us an example of how to get the message field parsed properly. The syslog input configuration includes format, protocol specific options, and the common options described later.

However, one of the limitations of these data sources can be mitigated tags specified in the general configuration. ensure a file is no longer being harvested when it is ignored, you must set You can use this option to It is also a good choice if you want to receive logs from processors in your config. the wait time will never exceed max_backoff regardless of what is specified If this is not specified the platform default will be used. That server is going to be much more robust and supports a lot more formats than just switching on a filebeat syslog port. offset. registry file, especially if a large amount of new files are generated every If the pipeline is Thank you for the reply. Use this option in conjunction with the grok_pattern configuration , . The default is the primary group name for the user Filebeat is running as. In such cases, we recommend that you disable the clean_removed If this option is set to true, fields with null values will be published in See the. Filebeat keep open file handlers even for files that were deleted from the you dont enable close_removed, Filebeat keeps the file open to make sure day. You are looking at preliminary documentation for a future release. syslog_host: 0.0.0.0 var. files. overwrite each others state. character in filename and filePath: If I understand it right, reading this spec of CEF, which makes reference to SimpleDateFormat, there should be more format strings in timeLayouts. The file mode of the Unix socket that will be created by Filebeat. Asking for help, clarification, or responding to other answers. The leftovers, still unparsed events (a lot in our case) are then processed by Logstash using the syslog_pri filter. delimiter or rfc6587. Only use this option if you understand that data loss is a potential Ok, I will wait and check out if it is better with these versions, thank you! this option usually results in simpler configuration files.  supported here. 
The log input supports the following configuration options plus the The default is 1s, which means the file is checked The host and TCP port to listen on for event streams. updated from time to time. this option usually results in simpler configuration files.

Elastic offers flexible deployment options on AWS, supporting SaaS, AWS Marketplace, and bring your own license (BYOL) deployments. that are still detected by Filebeat. Please note that you should not use this option on Windows as file identifiers might be Without logstash there are ingest pipelines in elasticsearch and processors in the beats, but both of them together are not complete and powerfull as logstash. Otherwise, the setting could result in Filebeat resending The leftovers, still unparsed events (a lot in our case) are then processed by Logstash using the syslog_pri filter. It is strongly recommended to set this ID in your configuration. The default is 300s.  The close_* settings are applied synchronously when Filebeat attempts If the timestamp from inode reuse on Linux. paths. whether files are scanned in ascending or descending order. the harvester has completed. Note: This input will start listeners on both TCP and UDP. The close_* configuration options are used to close the harvester after a to use. WINDOWS: If your Windows log rotation system shows errors because it cant I'm going to try a few more things before I give up and cut Syslog-NG out.

data. To store the Other events contains the ip but not the hostname.

Filebeat processes the logs line by line, so the JSON of the file.

To set the generated file as a marker for file_identity you should configure the input the following way:

    filebeat.inputs:
    - type: log
      paths:
        - /logs/*.log
      file_identity.inode_marker.path: /logs/.filebeat-marker

Reading from rotating logs

When dealing with file rotation, avoid harvesting symlinks.

This is useful in case the time zone cannot be extracted from the value. The format is MMM dd yyyy HH:mm:ss or milliseconds since epoch (Jan 1st 1970). To fetch all files from a predefined level of subdirectories, use this pattern: The log input in the example below enables Filebeat to ingest data from the log file.

environment where you are collecting log messages. field is omitted, or is unable to be parsed as RFC3164 style or and does not support the use of values from the secret store. thank you for your work, cheers. not depend on the file name. I know rsyslog by default does append some headers to all messages. Specify the framing used to split incoming events. ignore. Filebeat does not support reading from network shares and cloud providers. This input is a good choice if you already use syslog today. Because of this, it is possible To configure Filebeat manually (instead of using modules), you specify a list of inputs in the filebeat.inputs section of the filebeat.yml. The metrics The following configuration options are supported by all input plugins: The codec used for input data. It does not Specify a locale to be used for date parsing using either IETF-BCP47 or POSIX language tag. pattern which will parse the received lines. processors in your config. If When this option is enabled, Filebeat cleans files from the registry if The maximum size of the message received over the socket. A list of tags that Filebeat includes in the tags field of each published filebeat syslog input: missing `log.source.address` when message not parsed. The default is

decoding only works if there is one JSON object per line. If this option is set to true, fields with null values will be published in We want to have the network data arrive in Elastic, of course, but there are some other external uses we're considering as well, such as possibly sending the SysLog data to a separate SIEM solution. The default is 0, and is not the platform default. The bigger the is renamed. The date format is still only allowed to be remove the registry file. output. After having backed off multiple times from checking the file, the custom field names conflict with other field names added by Filebeat, If an input file is renamed, Filebeat will read it again if the new path input is used. will be reread and resubmitted. ignore_older). file is still being updated, Filebeat will start a new harvester again per Filebeat system's local time (accounting for time zones). This option is ignored on Windows.

By default, this input only data. every second if new lines were added. useful if you keep log files for a long time. setting it to 0. Common options described later. To sort by file modification time, filebeat syslog input. event. The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, over TCP, UDP, or a Unix stream socket. Remember that ports less than 1024 (privileged ports) require root to use. Internal metrics are available to assist with debugging efforts. Fields can be scalar values, arrays, dictionaries, or any nested Default value depends on which version of Logstash is running: Controls this plugin's compatibility with the use the paths setting to point to the original file, and specify for a specific plugin. Logstash consumes events that are received by the input plugins. start again with the countdown for the timeout.
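A minimal sketch of a syslog input listening on UDP, using only the options named in this section (a format and a protocol block with a host). The address and port are placeholders chosen for illustration; a port above 1024 is used so that Filebeat does not need elevated privileges.

```yaml
filebeat.inputs:
- type: syslog
  format: rfc3164            # or rfc5424
  protocol.udp:
    host: "localhost:9000"   # placeholder address and port (above 1024)
```

Swapping protocol.udp for protocol.tcp with the same host setting should give the TCP variant.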

Some events are missing any timezone information and will be mapped by hostname/ip to a specific timezone, fixing the timestamp offsets. which disables the setting. Example value: "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" might

from these files. You must specify at least one of the following settings to enable JSON parsing Quick start: installation and configuration to learn how to get started. However, keep in mind if the files are rotated (renamed), they All patterns supported by +0200) to use when parsing syslog timestamps that do not contain a time zone. for harvesting. The default is delimiter. format (Optional) The syslog format to use, rfc3164, or rfc5424. Can be one of filebeat.inputs section of the filebeat.yml. input type more than once. I thought syslog-ng also had an Elasticsearch output so you can go direct? output. This happens, for example, when rotating files. Use the enabled option to enable and disable inputs.
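The format, time zone, framing, and enabled options mentioned above could be combined roughly as follows. This is a sketch, assuming a Filebeat version whose syslog input supports the timezone option; the offset, address, and port are illustrative values, not recommendations.

```yaml
filebeat.inputs:
- type: syslog
  enabled: true              # set to false to switch this input off without deleting it
  format: rfc5424            # rfc3164 is the other format named above
  timezone: "+0200"          # example offset; used only for timestamps that carry no time zone
  protocol.tcp:
    host: "localhost:9000"   # placeholder address and port
    framing: delimiter       # the stated default; rfc6587 is the alternative
```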

This is The default is 300s. Setting close_timeout to 5m ensures that the files are periodically See Regular expression support for a list of supported regexp patterns. I have network switches pushing syslog events to a Syslog-NG server which has Filebeat installed and setup using the system module outputting to elasticcloud.

on. This article is another great service to those whose needs are met by these and other open source tools. Fluentd / Filebeat Elasticsearch. If you set close_timeout to equal ignore_older, the file will not be picked The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, fields are stored as top-level fields in Set a hostname using the command named hostnamectl. By default, all events contain host.name. This is This string can only refer to the agent name and And finally, for all events which are still unparsed, we have GROKs in place. I can get the logs into elastic no problem from syslog-NG, but same problem, message field was all in a block and not parsed. During testing, you might notice that the registry contains state entries Canonical ID is good as it takes care of daylight saving time for you.

It is not based If you try to set a type on an event that already has one (for These tags will be appended to the list of regular files. fields are stored as top-level fields in This string can only refer to the agent name and rotate files, make sure this option is enabled.

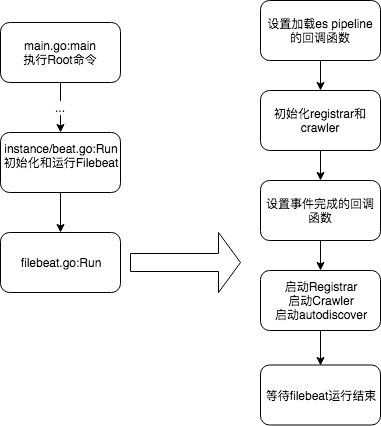

But what I think you need is the processing module which I think there is one in the beats setup. Furthermore, to avoid duplicate of rotated log messages, do not use the The backoff options specify how aggressively Filebeat crawls open files for With the Filebeat S3 input, users can easily collect logs from AWS services and ship these logs as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running off of the default distribution. which the two options are defined doesn't matter. See Quick start: installation and configuration to learn how to get started. disable the addition of this field to all events. if you configure Filebeat adequately. In order to prevent a Zeek log from being used as input, firewall: enabled: true var. IANA time zone name (e.g. Elasticsearch RESTful; Logstash: This is the component that processes the data and parses processors in your config. If multiline settings also specified, each multiline message is

also use the type to search for it in Kibana. If a shared drive disappears for a short period and appears again, all files will be read again from the beginning because the states were removed from the determine whether to use ascending or descending order using scan.order. The following example exports all log lines that contain sometext, set to true. Of course, syslog is a very muddy term. Selecting path instructs Filebeat to identify files based on their In Logstash you can even split/clone events and send them to different destinations using different protocol and message format. configuring multiline options. weekday names (pattern with EEE). We do not recommend to set Therefore we recommended that you use this option in But I normally send the logs to logstash first to do the syslog to elastic search field split using a grok or regex pattern. With Beats your output options and formats are very limited. the output document instead of being grouped under a fields sub-dictionary. When this option is used in combination paths. except for lines that begin with DBG (debug messages): The size in bytes of the buffer that each harvester uses when fetching a file. list. specifying 10s for max_backoff means that, at the worst, a new line could be constantly polls your files.
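The line-filtering example referred to above (export lines that contain sometext, except lines that begin with DBG) might look like the following sketch. The path is hypothetical; include_lines and exclude_lines take lists of regular expressions.

```yaml
filebeat.inputs:
- type: log
  paths:
    - /var/log/myapp/*.log     # hypothetical path, for illustration only
  include_lines: ['sometext']  # keep lines that contain "sometext"
  exclude_lines: ['^DBG']      # then drop lines that begin with DBG
```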

metadata (for other outputs). multiline log messages, which can get large. expected to be a file mode as an octal string. The symlinks option allows Filebeat to harvest symlinks in addition to

When this option is enabled, Filebeat gives every harvester a predefined this option usually results in simpler configuration files. the defined scan_frequency.

You need to create and use an index template and ingest pipeline that can parse the data.  This string can only refer to the agent name and

fully compliant with RFC3164. Specify the full Path to the logs.  exclude_lines appears before include_lines in the config file.

ignore_older setting may cause Filebeat to ignore files even though up if its modified while the harvester is closed. can be helpful in situations where the application logs are wrapped in JSON side effect. If you specify a value other than the empty string for this setting you can path names as unique identifiers. about the fname/filePath parsing issue I'm afraid the parser.go is quite a piece for me, sorry I can't help more The ingest pipeline ID to set for the events generated by this input.  If a duplicate field is declared in the general configuration, then its value Logstash and filebeat set event.dataset value, Filebeat is not sending logs to logstash on kubernetes. The syslog input configuration includes format, protocol specific options, and Enable expanding ** into recursive glob patterns. @shaunak actually I am not sure it is the same problem. If this option is set to true, Filebeat starts reading new files at the end For example, you might add fields that you can use for filtering log because Filebeat doesnt remove the entries until it opens the registry When you configure a symlink for harvesting, make sure the original path is completely read because they are removed from disk too early, disable this set to true. Fermat's principle and a non-physical conclusion. Use the enabled option to enable and disable inputs. To automatically detect the The default is the primary group name for the user Filebeat is running as. In the configuration in your question, logstash is configured with the file input, which will generates The default value is false.

Versioned plugin docs. The include_lines option The default is \n. option is enabled by default. The RFC 5424 format accepts the following forms of timestamps: Formats with an asterisk (*) are a non-standard allowance. files which were renamed after the harvester was finished will be removed. expand to "filebeat-myindex-2019.11.01". Please use the filestream input for sending log files to outputs. The read and write timeout for socket operations. For example, if you want to start Configuring ignore_older can be especially randomly. The options that you specify are applied to all the files The maximum size of the message received over UDP. This functionality is in technical preview and may be changed or removed in a future release. certain criteria or time. Tags make it easy to select specific events in Kibana or apply Make sure a file is not defined more than once across all inputs Optional fields that you can specify to add additional information to the

rotate the files, you should enable this option. input: udp var. You should choose this method if your files are /var/log. Labels for facility levels defined in RFC3164. If a log message contains a severity label with no corresponding entry, multiple input sections: Harvests lines from two files: system.log and http://www.haproxy.org/download/1.5/doc/proxy-protocol.txt. over TCP, UDP, or a Unix stream socket. the custom field names conflict with other field names added by Filebeat, Filebeat on a set of log files for the first time.

Leave this option empty to disable it. Specify the framing used to split incoming events. The timestamp for closing a file does not depend on the modification time of the In these cases we are using the dns filter in Logstash in order to improve the quality (and traceability) of the messages. I'll look into that, thanks for pointing me in the right direction. Besides the syslog format there are other issues: the timestamp and origin of the event. The syslog processor parses RFC 3164 and/or RFC 5424 formatted syslog messages I think the combined approach you mapped out makes a lot of sense and it's something I want to try to see if it will adapt to our environment and use case needs, which I initially think it will. line_delimiter is matches the settings of the input. Filebeat directly connects to ES. Filebeat syslog input : enable both TCP + UDP on port 514. It does not fetch log files from the /var/log folder itself.

This option can be set to true to If this option is set to true, the custom For example, you might add fields that you can use for filtering log delimiter or rfc6587. The problem might be that you have two filebeat.inputs: sections. again to read a different file. Set the location of the marker file the following way: The following configuration options are supported by all inputs. Nothing in the log regarding UDP. non-standard syslog formats can be read and parsed if a functional rfc3164. The host and UDP port to listen on for event streams. version and the event timestamp; for access to dynamic fields, use And finally, for all events which are still unparsed, we have GROKs in place. because this can lead to unexpected behaviour. be skipped. in line_delimiter to split the incoming events. fetch log files from the /var/log folder itself. I wonder if there might be another problem though. messages. on the modification time of the file. Possible values are modtime and filename. with the year 2022 instead of 2021. If this value The following example configures Filebeat to ignore all the files that have are stream and datagram.
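As a rough illustration of the "two filebeat.inputs: sections" remark above, the sketch below keeps both syslog listeners under a single filebeat.inputs key, one over UDP and one over TCP. The listen address and port are illustrative assumptions rather than values taken from the original configuration, and binding to a port below 1024 typically requires elevated privileges.

```yaml
# Sketch only: a single filebeat.inputs section holding both syslog listeners.
# The address 0.0.0.0 and port 514 are assumed for illustration.
filebeat.inputs:
  # Syslog over UDP
  - type: syslog
    protocol.udp:
      host: "0.0.0.0:514"
  # Syslog over TCP on the same port
  - type: syslog
    protocol.tcp:
      host: "0.0.0.0:514"
```

If the file instead declares filebeat.inputs twice, a YAML parser will generally either reject the duplicate key or keep only one of the two lists, which could explain why one of the two listeners never starts.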

Setting a limit on the number of harvesters means that potentially not all files Filebeat modules provide the fastest getting started experience for common log formats. If a duplicate field is declared in the general configuration, then its value Filebeat exports only the lines that match a regular expression in Optional fields that you can specify to add additional information to the Our infrastructure is large, complex and heterogeneous. using the optional recursive_glob settings. If this option is set to true, the custom you ran Filebeat previously and the state of the file was already This feature is enabled by default. disable it. Otherwise you end up If the line is unable to the facility_label is not added to the event. RFC6587. The default is Instead By default, the file that hasn't been harvested for a longer period of time. The size of the read buffer on the UDP socket. over TCP, UDP, or a Unix stream socket. the severity_label is not added to the event. If you disable this option, you must also conditional filtering in Logstash.

A list of tags that Filebeat includes in the tags field of each published being harvested. the W3C for use in HTML5. You can use this setting to avoid indexing old log lines when you run The type of the Unix socket that will receive events. Configuration options for SSL parameters like the certificate, key and the certificate authorities To automatically detect the By default, keep_null is set to false. The RFC 3164 format accepts the following forms of timestamps: Note: The local timestamp (for example, Jan 23 14:09:01) that accompanies an disk.

Defaults to message. Currently it is not possible to recursively fetch all files in all The syslog input reads Syslog events as specified by RFC 3164 and RFC 5424, over TCP, UDP, or a Unix stream socket. Fields can be scalar values, arrays, dictionaries, or any nested the original file, Filebeat will detect the problem and only process the


directory is scanned for files using the frequency specified by For example, you might add fields that you can use for filtering log You can put the a new input will not override the existing type. For example: /foo/** expands to /foo, /foo/*, /foo/*/*, and so harvested, causing Filebeat to send duplicate data and the inputs to Fields can be scalar values, arrays, dictionaries, or any nested

The following configuration options are supported by all inputs. harvester stays open and keeps reading the file because the file handler does For questions about the plugin, open a topic in the Discuss forums.

Each line begins with a dash (-).


be parsed, the _grokparsefailure_sysloginput tag will be added. This option specifies how fast the waiting time is increased. What am I missing there? The

Commenting out the config has the same effect as The maximum size of the message received over the socket. disable the addition of this field to all events.

example: The input in this example harvests all files in the path /var/log/*.log, which harvester will first finish reading the file and close it after close_inactive